Per @karpathy's recommendation, I thought I'd spend a few hours learning Streamlit.

See the code at leeknowlton/streamlit-exploration.

Basic Installation

Note: See the Streamlit docs for more comprehensive tutorials. These are just quick, rough notes.

- If you’ve already set up the project, navigate to the folder and run `source .venv/bin/activate` to reactivate your virtual environment.
- Create a new virtual environment with `python3 -m venv .venv`, then activate it with `source .venv/bin/activate`.
- Install the streamlit package: `pip3 install streamlit`
- Run the demo app: `streamlit hello`
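If `streamlit hello` isn't found, one thing worth ruling out is that the virtual environment isn't active. A small stdlib-only sanity check like the one below can be saved and run with `python`; the `check_env` helper is a hypothetical name, not part of Streamlit. It relies on the documented behavior that inside a venv `sys.prefix` points at the venv while `sys.base_prefix` still points at the base interpreter.

```python
import importlib.util
import sys
from importlib import metadata


def check_env() -> dict:
    """Report whether a venv is active and whether streamlit is importable."""
    # In a venv created by `python3 -m venv`, sys.prefix differs from sys.base_prefix.
    in_venv = sys.prefix != sys.base_prefix
    # find_spec returns None when the package can't be imported.
    has_streamlit = importlib.util.find_spec("streamlit") is not None
    version = metadata.version("streamlit") if has_streamlit else None
    return {"in_venv": in_venv, "streamlit": has_streamlit, "version": version}


print(check_env())
```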

Voilà!

Basic tables and charts

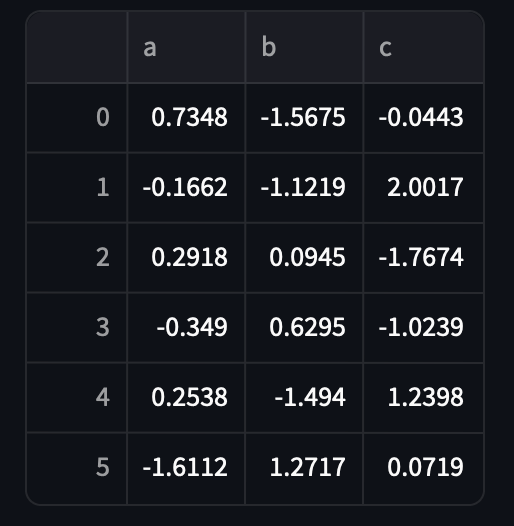

- Using NumPy and pandas to create a table of random numbers

```python
import numpy as np
import pandas as pd
import streamlit as st

# 6 rows x 3 columns of standard-normal random values
data = pd.DataFrame(np.random.randn(6, 3), columns=["a", "b", "c"])
```

- Visualizing the table

```python
st.write(data)
```

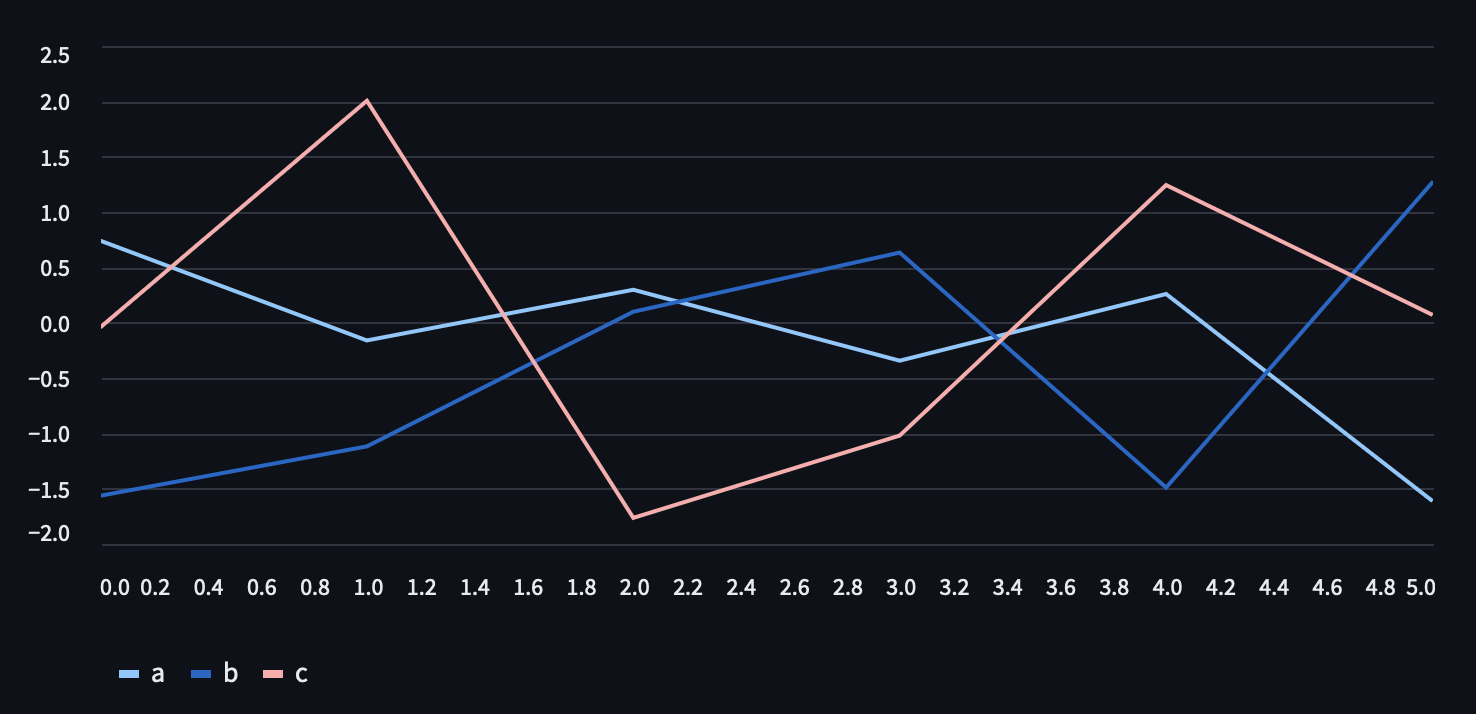

- A first attempt at adding a line chart

```python
st.line_chart(data)
```
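Streamlit reruns the whole script from top to bottom whenever the page reloads or a widget changes, so `np.random.randn` produces a different table on every run. A minimal sketch, assuming you want a stable table while iterating, seeds NumPy's `default_rng` and keeps the data prep separate from the Streamlit calls. `make_random_table` and `render` are illustrative names, not from the original repo.

```python
import numpy as np
import pandas as pd


def make_random_table(rows: int = 6, columns=("a", "b", "c"), seed: int = 0) -> pd.DataFrame:
    """Standard-normal random table; a fixed seed gives the same values on every rerun."""
    rng = np.random.default_rng(seed)
    return pd.DataFrame(rng.standard_normal((rows, len(columns))), columns=list(columns))


def render(data: pd.DataFrame) -> None:
    # Imported inside the function so the data helper works without Streamlit installed.
    import streamlit as st

    st.write(data)
    # For a wide DataFrame, st.line_chart draws one line per column against the index.
    st.line_chart(data)
```

In a Streamlit script you would call `render(make_random_table())`; splitting the pure pandas part out makes it easy to check in isolation.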

A Simple Agent Frontend

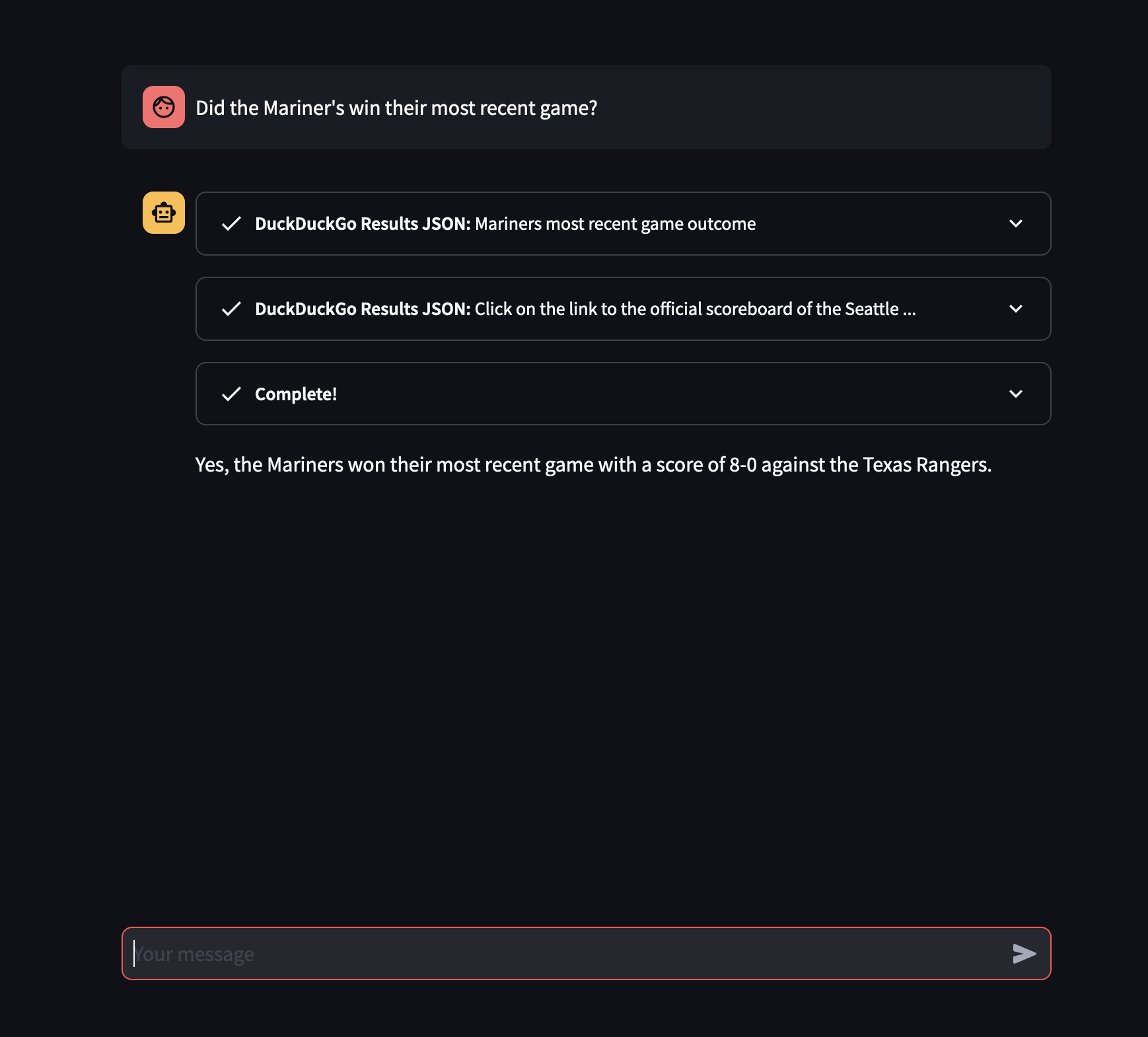

Before wrapping up, I created a simple AI agent front-end that used DuckDuckGo search as a tool.

```python
import os

import streamlit as st
from dotenv import load_dotenv

# Legacy LangChain import paths; newer releases move these into
# langchain_community / langchain_openai.
from langchain.agents import AgentType, initialize_agent
from langchain.callbacks import StreamlitCallbackHandler
from langchain.chat_models import ChatOpenAI
from langchain.tools import DuckDuckGoSearchResults, Tool

# Load OPENAI_API_KEY from a local .env file into the environment
load_dotenv()
openai_api_key = os.getenv("OPENAI_API_KEY")

search = DuckDuckGoSearchResults()
# streaming=True emits tokens incrementally so the callback handler can show them
llm = ChatOpenAI(temperature=0, streaming=True, openai_api_key=openai_api_key)

# Expose DuckDuckGo search to the agent as a single tool
tools = [
    Tool(
        name="Search",
        func=search.run,
        description="useful for when you need to answer questions about current events",
    ),
]

agent = initialize_agent(
    tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True
)

# st.chat_input returns None until the user submits a message
if prompt := st.chat_input():
    st.chat_message("user").write(prompt)
    with st.chat_message("assistant"):
        # Renders the agent's intermediate steps inside this container
        st_callback = StreamlitCallbackHandler(st.container())
        response = agent.run(prompt, callbacks=[st_callback])
        st.write(response)
```
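`AgentType.ZERO_SHOT_REACT_DESCRIPTION` runs a ReAct-style loop: the model reads each tool's description, writes a Thought, picks an Action and an Action Input, receives the tool's output as an Observation, and repeats until it emits a Final Answer. The toy sketch below mimics that cycle with a scripted fake LLM and a fake search tool. `run_react`, `fake_llm`, and `fake_search` are hypothetical names, not LangChain APIs, and LangChain's real prompt and output parser are considerably more involved.

```python
import re
from typing import Callable, Dict

# Parse "Action: <tool>\nAction Input: <input>" and "Final Answer: <text>" lines.
ACTION_RE = re.compile(r"Action: (.+)\nAction Input: (.+)")
FINAL_RE = re.compile(r"Final Answer: (.+)")


def run_react(
    question: str,
    llm: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> str:
    """Ask the LLM, run the tool it picks, feed back the observation, repeat."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)
        transcript += step + "\n"
        final = FINAL_RE.search(step)
        if final:
            return final.group(1).strip()
        action = ACTION_RE.search(step)
        if action is None:
            raise ValueError(f"Could not parse LLM output: {step!r}")
        tool_name, tool_input = action.group(1).strip(), action.group(2).strip()
        observation = tools[tool_name](tool_input)
        transcript += f"Observation: {observation}\n"
    raise RuntimeError("Agent did not finish within max_steps")


def fake_llm(transcript: str) -> str:
    # Hypothetical scripted model: search first, then answer from the observation.
    if "Observation:" not in transcript:
        return "Thought: I should search.\nAction: Search\nAction Input: streamlit latest release"
    last_observation = transcript.rsplit("Observation: ", 1)[1].strip()
    return "Thought: I have what I need.\nFinal Answer: " + last_observation


def fake_search(query: str) -> str:
    # Hypothetical stand-in for DuckDuckGoSearchResults.run
    return f"results for '{query}'"


print(run_react("What is the latest Streamlit release?", fake_llm, {"Search": fake_search}))
# → results for 'streamlit latest release'
```

This is also why the `description` passed to `Tool` matters: in the zero-shot setup, it is the only information the model has when deciding whether to call the search tool.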