LLMs sometimes give inaccurate, inconsistent, or unhelpful responses because they don’t have access to the right information.

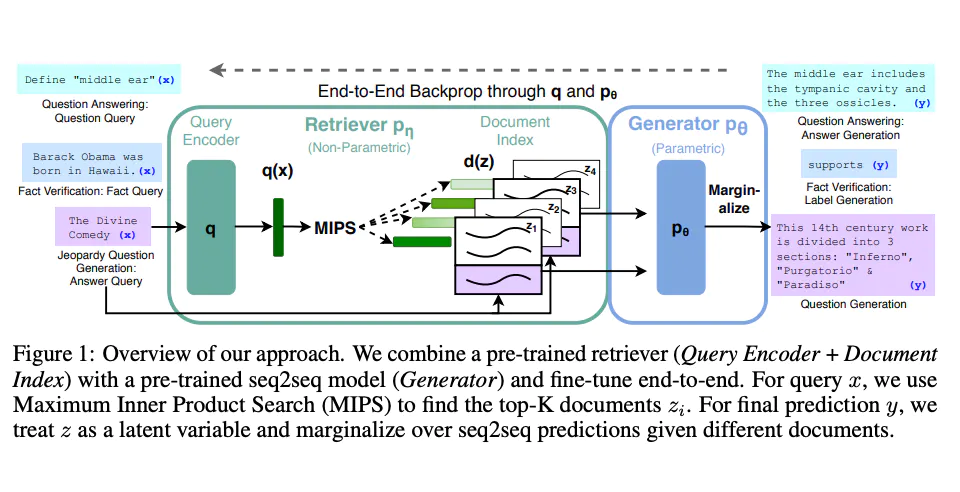

To address this limitation, Lewis et al. (2020) proposed a technique called Retrieval-Augmented Generation (RAG).

RAG works as follows:

- Given an input, such as a question or a prompt, RAG uses an information retrieval (IR) system to retrieve a set of relevant documents or passages that may contain useful information for generating the response.

- RAG then concatenates the input with the retrieved documents or passages, and feeds them to an LLM, which generates the response based on the combined input.
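The two steps above can be sketched in a few lines of Python. This is a minimal illustration, not the method from Lewis et al.: the word-overlap scorer stands in for a real IR system, and `retrieve`, `build_prompt`, `rag_answer`, and `echo_llm` are hypothetical names made up for this example. The `generate` argument is assumed to be any function that sends a prompt string to an LLM and returns its text.

```python
import re
from collections import Counter
from typing import Callable, List


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, corpus: List[str], k: int = 2) -> List[str]:
    """Toy IR step (assumption): rank passages by shared query tokens."""
    query_tokens = Counter(tokenize(query))

    def overlap(passage: str) -> int:
        passage_tokens = Counter(tokenize(passage))
        # Counter intersection keeps the minimum count of each shared token.
        return sum((query_tokens & passage_tokens).values())

    # sorted() is stable, so tied passages keep their corpus order.
    ranked = sorted(corpus, key=overlap, reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: List[str]) -> str:
    """Concatenate the retrieved passages with the original input."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


def rag_answer(
    query: str,
    corpus: List[str],
    generate: Callable[[str], str],
    k: int = 2,
) -> str:
    """Retrieve, concatenate, then hand the combined input to the LLM."""
    passages = retrieve(query, corpus, k)
    return generate(build_prompt(query, passages))


corpus = [
    "RAG was proposed by Lewis et al. in 2020.",
    "An information retrieval system returns passages relevant to a query.",
    "Bananas are rich in potassium.",
]


def echo_llm(prompt: str) -> str:
    # Placeholder for a real LLM call: it just returns the prompt it received.
    return prompt


print(rag_answer("Who proposed RAG?", corpus, echo_llm, k=1))
```

Swapping `echo_llm` for a real model call, and the overlap scorer for a sparse or dense retriever, would leave the overall retrieve-then-generate shape unchanged.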

Image Source: Lewis et al. (2021)

Combining retrieval with generation gives RAG systems several strengths:

- They can access and incorporate external knowledge from the IR system, which can improve the factual accuracy and specificity of the responses.

- They can pick up new information whenever the IR system's document collection is updated, without retraining the LLM, which can improve the timeliness and reliability of the responses.

- They can reduce the risk of hallucination by using the retrieved documents or passages as evidence or support for the responses.

RAG has been shown to perform well on various natural language generation tasks, such as question answering, fact verification, and text summarization. RAG can also be used to enhance conversational AI systems, such as chatbots, by enabling them to retrieve and use relevant information from a large knowledge base, such as Wikipedia, to generate more informative and engaging responses.

Further Information and Tutorials

- Making Retrieval Augmented Generation Better

- RAG by HuggingFace

- Chatbots with RAG: LangChain Full Walkthrough