I used to think I understood entropy.

I’d imagine a big cluster of balls breaking up into smaller ones.

Entropy, I thought, was the force, or at least the tendency, that made bigger things break into a greater number of smaller things.

This, it turns out, was wrong.

Entropy is not the chaotic force I was imagining. It isn’t about things falling apart or spreading out.

Fixing Misconceptions

Before I could grasp what entropy was, I had to figure out where I had gone astray. These three facts helped me break apart my previous misconceptions and set a better foundation for understanding.

- Entropy is often used as shorthand for the second law of thermodynamics. Entropy, as I had imagined it, is really a colloquial stand-in for the second law of thermodynamics (i.e., the entropy of an isolated system never decreases). This is NOT the strict definition of entropy itself, but we tend to conflate the two because they are almost always introduced together.

- Entropy is a metric, not a force or tendency. Strictly speaking, entropy is a metric, like length or mass. It’s not a natural force like gravity, or even a tendency.

- There’s no easy physical analogy. Entropy isn’t something you can observe directly. It is a statistical quantity, which means you can’t read it off an instrument the way you can length or mass.

So, what actually is entropy?

Here’s a definition:

Entropy is a measure of how many possible arrangements, or microstates, within a system correspond to the same overall condition, or macrostate.
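In physics this definition is usually written as Boltzmann's formula, in which entropy is the logarithm of the microstate count rather than the raw count. The symbols are not used elsewhere in this post: $\Omega$ is the number of microstates compatible with the macrostate, $k_B$ is Boltzmann's constant, and the formula assumes every microstate is equally likely.

$$
S = k_B \ln \Omega
$$

A macrostate with exactly one microstate has $\Omega = 1$ and therefore $S = 0$. The more microstates that fit the macrostate, the larger $S$ becomes.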

Understanding Entropy using Dice

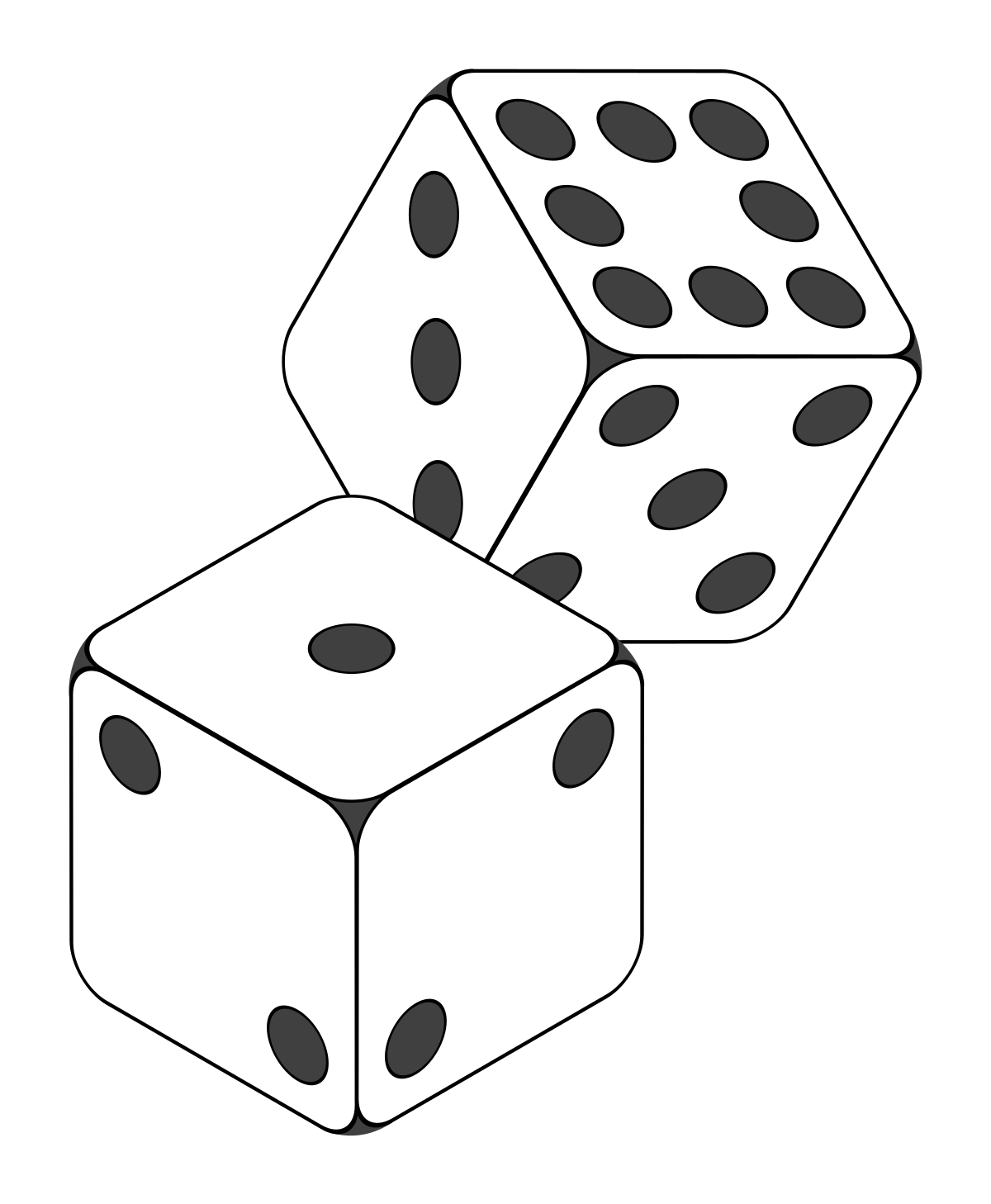

Take two six-sided dice. Roll them, and let’s say each lands on a one.

The macrostate is the sum: two.

The microstate is the specific combination of the dice: (1,1).

Only one microstate exists for this macrostate—low entropy.

If the dice sum to seven, there are six possible microstates: (1,6), (2,5), (3,4), (4,3), (5,2), and (6,1). That’s higher entropy: more microstates for the same macrostate.
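The counting above can be checked by brute force. This is a minimal sketch, not part of the original post: it assumes the two dice are distinguishable, so (1,6) and (6,1) count as separate microstates, and the function name `microstates_by_sum` is made up for illustration.

```python
from collections import defaultdict
from itertools import product


def microstates_by_sum(sides=6):
    """Group every ordered (die1, die2) roll by its sum (the macrostate)."""
    groups = defaultdict(list)
    for roll in product(range(1, sides + 1), repeat=2):
        groups[sum(roll)].append(roll)
    return dict(groups)


groups = microstates_by_sum()

# Print each macrostate, how many microstates it has, and what they are.
for total in sorted(groups):
    print(total, len(groups[total]), groups[total])
```

The sums two and twelve each have a single microstate, while seven has six, the most of any sum.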

Entropy and Uncertainty

Entropy is a way to quantify uncertainty. If you only know the macrostate (the sum of the dice), low entropy (like a sum of two) means you can be completely certain about the microstate (the dice must be (1,1)). With higher entropy (a sum of seven), there’s more uncertainty about which microstate you are actually in.
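One way to put a number on that uncertainty is to take the base-2 logarithm of the microstate count, which gives the answer in bits. The sketch below assumes fair dice, so every microstate is equally likely. The choice of base 2 is an illustrative convention borrowed from information theory, and `entropy_bits` is a hypothetical helper name.

```python
import math
from collections import Counter
from itertools import product


def entropy_bits(total, sides=6):
    """Return log2 of the number of two-dice microstates that sum to `total`."""
    counts = Counter(a + b for a, b in product(range(1, sides + 1), repeat=2))
    if counts[total] == 0:
        raise ValueError(f"no roll of two {sides}-sided dice sums to {total}")
    return math.log2(counts[total])


print(entropy_bits(2))            # 0.0
print(round(entropy_bits(7), 3))  # 2.585
```

Zero bits means that knowing the sum tells you everything about the dice. For a sum of seven you would still need about 2.6 bits of extra information to pin down the exact roll.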

This dice analogy is a vast simplification, of course. Real-world systems contain vastly more particles, each with many possible states.
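To get a feel for how quickly the counts grow, the same bookkeeping can be extended from two dice to many. This is a rough sketch under the same fair-dice assumption. `microstate_counts` is a hypothetical helper that adds one die at a time instead of listing every roll, which would be impractical for large numbers of dice.

```python
from collections import Counter


def microstate_counts(num_dice, sides=6):
    """Map each possible sum to the number of ordered rolls producing it."""
    counts = Counter({0: 1})  # zero dice: one way to have a sum of zero
    for _ in range(num_dice):
        next_counts = Counter()
        for total, ways in counts.items():
            for face in range(1, sides + 1):
                next_counts[total + face] += ways
        counts = next_counts
    return counts


for n in (2, 10, 100):
    counts = microstate_counts(n)
    # Pick a macrostate (sum) with the largest number of microstates.
    likeliest_sum, ways = max(counts.items(), key=lambda item: item[1])
    print(f"{n} dice: sum {likeliest_sum} has {ways} microstates")
```

Even at 100 dice the count for the most likely sum is astronomically large, and a physical system has many orders of magnitude more particles than that.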

The core principle, however, remains: entropy measures the number of ways a system can be arranged without changing its overall appearance.